Learning any new skill, such as riding a bike, takes a lot of time and effort, often many days or weeks. But once your brain has learnt all the tiny balance corrections it needs, you can ride any bike (even a brand new one) almost straight away.

The same is true when machines learn. Machine learning is a similarly lopsided process: training the model takes a long time and a lot of computing power, as an optimisation process searches for the best values for the millions of parameters (weights and biases) within the artificial neural network. Once the model is trained, however, the calculations for prediction (inference) take much less effort and can be performed in real time on a relatively modest computer, one small enough and cheap enough to be duplicated many times, close to the site of the problem being solved.
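The imbalance between training and inference can be seen even in a toy model. The sketch below is only an illustration, not the project's code: it fits a single weight and a single bias by gradient descent, and the data, learning rate, and epoch count are all assumed values chosen for the example.

```python
# Toy illustration: fit y = w*x + b by gradient descent, then predict once.
# The samples, learning rate, and epoch count are illustrative assumptions.

def train(samples, epochs=2000, lr=0.01):
    """Fit a one-weight, one-bias model; return (w, b, update_steps)."""
    w, b = 0.0, 0.0
    steps = 0
    for _ in range(epochs):
        for x, y in samples:
            error = (w * x + b) - y
            # Move w and b a small step against the error gradient.
            w -= lr * error * x
            b -= lr * error
            steps += 1
    return w, b, steps


def predict(w, b, x):
    """Inference: a single multiply and a single add."""
    return w * x + b


# Synthetic data generated from the line y = 2x + 1.
samples = [(x, 2.0 * x + 1.0) for x in range(-5, 6)]

w, b, steps = train(samples)
print(f"training needed {steps} weight updates")
print(f"prediction for x=10: {predict(w, b, 10):.3f}")
```

Training loops over the data thousands of times, while each prediction afterwards is one multiplication and one addition. A real neural network has millions of weights rather than two, but the same asymmetry holds.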

Edge computing distributes the computing processes to multiple smaller, cheaper units and permits data storage and computation to be performed closer to the source of data. This allows devices to process data quickly and act on it at the network’s edge, without the latency of communicating large amounts of data over a network.

Edge computing can improve response times for remote devices and smart sensors, and provide more timely insights, by minimising the amount of data that needs to be transmitted to a central datacentre.
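A rough calculation shows how much data can be kept off the network. The figures here are assumptions for illustration only (a 640x480 colour camera at 30 frames per second, and a hypothetical JSON result message); they are not measurements from this project.

```python
import json

# Illustrative assumptions: an uncompressed 640x480 RGB camera at 30 frames
# per second, and one small JSON result message sent per frame.
WIDTH, HEIGHT, CHANNELS, FPS = 640, 480, 3, 30


def raw_bytes_per_second(width, height, channels, fps):
    """Uncompressed video: one byte per channel per pixel per frame."""
    return width * height * channels * fps


def result_bytes_per_second(message, fps):
    """Bytes needed to send one JSON-encoded result per frame."""
    return len(json.dumps(message).encode("utf-8")) * fps


message = {"label": "person", "confidence": 0.93}  # hypothetical result

raw = raw_bytes_per_second(WIDTH, HEIGHT, CHANNELS, FPS)
edge = result_bytes_per_second(message, FPS)

print(f"raw video:    {raw / 1e6:.1f} MB/s")
print(f"results only: {edge} B/s")
print(f"reduction:    roughly {raw // edge}x")
```

Real systems usually compress video before sending it, so the gap would be smaller in practice, but sending only the inference result still removes most of the traffic.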

Even so, calculating an inference requires many matrix additions and multiplications, and it is only in the last decade that small microprocessors have become fast enough to undertake this task. The addition of a low-power dedicated GPU, designed specifically for fast matrix operations, has also greatly widened the scope for performing inference on small computers at the edge of the system.
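To make the matrix arithmetic concrete, here is a minimal sketch of one fully connected layer written in plain Python. The weights, biases, and inputs are made-up values, and the ReLU activation is an assumed choice for the example; a real model would run many such layers with far larger matrices, typically on the GPU.

```python
def dense(inputs, weights, biases):
    """One fully connected layer: weights x inputs + biases, then ReLU."""
    outputs = []
    for row, bias in zip(weights, biases):
        total = bias
        for w, x in zip(row, inputs):
            total += w * x  # one multiply-accumulate per weight
        outputs.append(max(0.0, total))  # ReLU: clamp negatives to zero
    return outputs


def count_macs(weights):
    """Number of multiply-accumulate operations for one forward pass."""
    return sum(len(row) for row in weights)


# Made-up example values: 3 inputs feeding 2 neurons.
inputs = [1.0, 2.0, 3.0]
weights = [[0.5, -1.0, 0.25],
           [1.0, 1.0, 1.0]]
biases = [0.1, -2.0]

print(dense(inputs, weights, biases))
print(count_macs(weights), "multiply-accumulates")
```

This tiny layer needs only 6 multiply-accumulate operations, but the count grows with the product of the layer's input and output sizes: a layer with 1024 inputs and 1024 outputs needs 1,048,576 of them per inference. This is why hardware built for parallel matrix operations makes such a difference.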

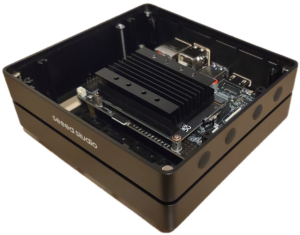

The platform this project is built on is the Seeed Studio reComputer J1010, an NVIDIA Jetson Nano 2GB platform with an Arm Cortex-A57 CPU and an NVIDIA Maxwell GPU.

The Arm Cortex-A57 CPU operates at 1.43 GHz and runs the Ubuntu 18.04 Linux operating system with the NVIDIA JetPack SDK installed, which supplies the Docker environment for the Python programming language and the pre-trained neural-network models. The NVIDIA Maxwell GPU provides 128 CUDA cores for the machine learning to utilise.

A guide to unpacking and setting up the hardware for this demonstration is available here:

00 Setting up the Demonstration Hardware_Guide [ ⇓ ]

Further advanced technical details on how to develop the hardware used for this demonstration project are available in the project GitHub repository [ ↵ ]

Technical details on the hardware architecture of the reComputer J1010 are available from the Seeed Studio website [ ↵ ]

Details on installing and using the NVIDIA JetPack SDK on the system are available from the NVIDIA DLI course [ ↵ ]

Quick Shortcuts to project resources:

A glossary of the highlighted technical terms used can be downloaded here [ ⇓ ]

Download the eBooklet [ ⇓ ], a digital copy of the printed project booklet